Filebeats cleanup data install

1. To start our Filebeat setup, we first need to validate that logging is correctly set up for the various components of our lab.

1.1 Elasticsearch

    path.logs: /var/log/elasticsearch

1.2.1 Configure Kibana logging

    st: /var/log/kibana
    : true
    : 7

1.2.2 Create log file for Kibana

    touch /var/log/kibana
    chown kibana:kibana /var/log/kibana

1.2.3 Configure logging for Metricbeat

    sudo nano /etc/metricbeat/metricbeat.yml

    # In the logging section, configure the following
    logging.to_files: true
    logging.files:
      path: /var/log/metricbeat
      name: metricbeat
      keepfiles: 7
      permissions: 0644

2. Restart services for the changes to take effect

    sudo systemctl restart elasticsearch
    sudo systemctl restart kibana
    sudo systemctl restart metricbeat

3. Install Filebeat

    apt-get install filebeat

4. Configure Filebeat

    sudo nano /etc/filebeat/filebeat.yml

    # Live reloading
    reload.enabled: true
    reload.period: 10s
    # Setup dashboards
    : true
    # Kibana
    host: "localhost:5601"
    # elasticsearch output
    hosts:
    protocol: "http"
    username: "elastic"
    password: "goodwitch"
    #
    logging.to_files: true
    logging.files:
      path: /var/log/filebeat
      name: filebeat
      keepfiles: 7
      permissions: 0644
    # setup monitoring through metricbeat
    http.enabled: true
    http.port: 5067

5. Enable beat-xpack if not already enabled

    sudo metricbeat modules enable beat-xpack

6. Configure beat-xpack

    sudo nano /etc/metricbeat/modules.d/beat-xpack.yml

    hosts: ["
    username: "beats_system"
    password: "avatar"

7. Validate Metricbeat service is running

    systemctl status metricbeat

8. Start Filebeat

    systemctl start filebeat
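
The `http.enabled: true` and `http.port: 5067` settings in the Filebeat configuration expose a local HTTP endpoint that Metricbeat's beat-xpack module reads. As a rough sanity check once Filebeat is running, a small Python sketch like the one below could query that endpoint directly. It assumes the endpoint listens on `localhost` and serves JSON at `/stats`, as the Beats HTTP endpoint normally does; the helper names `stats_url` and `fetch_stats` are made up for this illustration.

```python
import json
from urllib.request import urlopen


def stats_url(host: str = "localhost", port: int = 5067) -> str:
    """Build the URL of the Beat's stats endpoint (port taken from http.port)."""
    return f"http://{host}:{port}/stats"


def fetch_stats(url: str, timeout: float = 5.0) -> dict:
    """Fetch and decode the JSON document served by the stats endpoint.

    Raises OSError (for example URLError) if the endpoint is not reachable.
    """
    with urlopen(url, timeout=timeout) as response:
        return json.load(response)
```

Calling `fetch_stats(stats_url())` on the ELK server should return a dictionary of counters if the endpoint is up; a connection error usually means Filebeat is not running or `http.enabled` is not set.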

Filebeats cleanup data software

The minimum software requirements for installing Filebeat on the ELK server should already be met, as we already have Elasticsearch and Metricbeat installed on this server.

Installation and configuration of Filebeat on ELK Server

We’ll use the APT repository method to install Filebeat.

Filebeats cleanup data trial

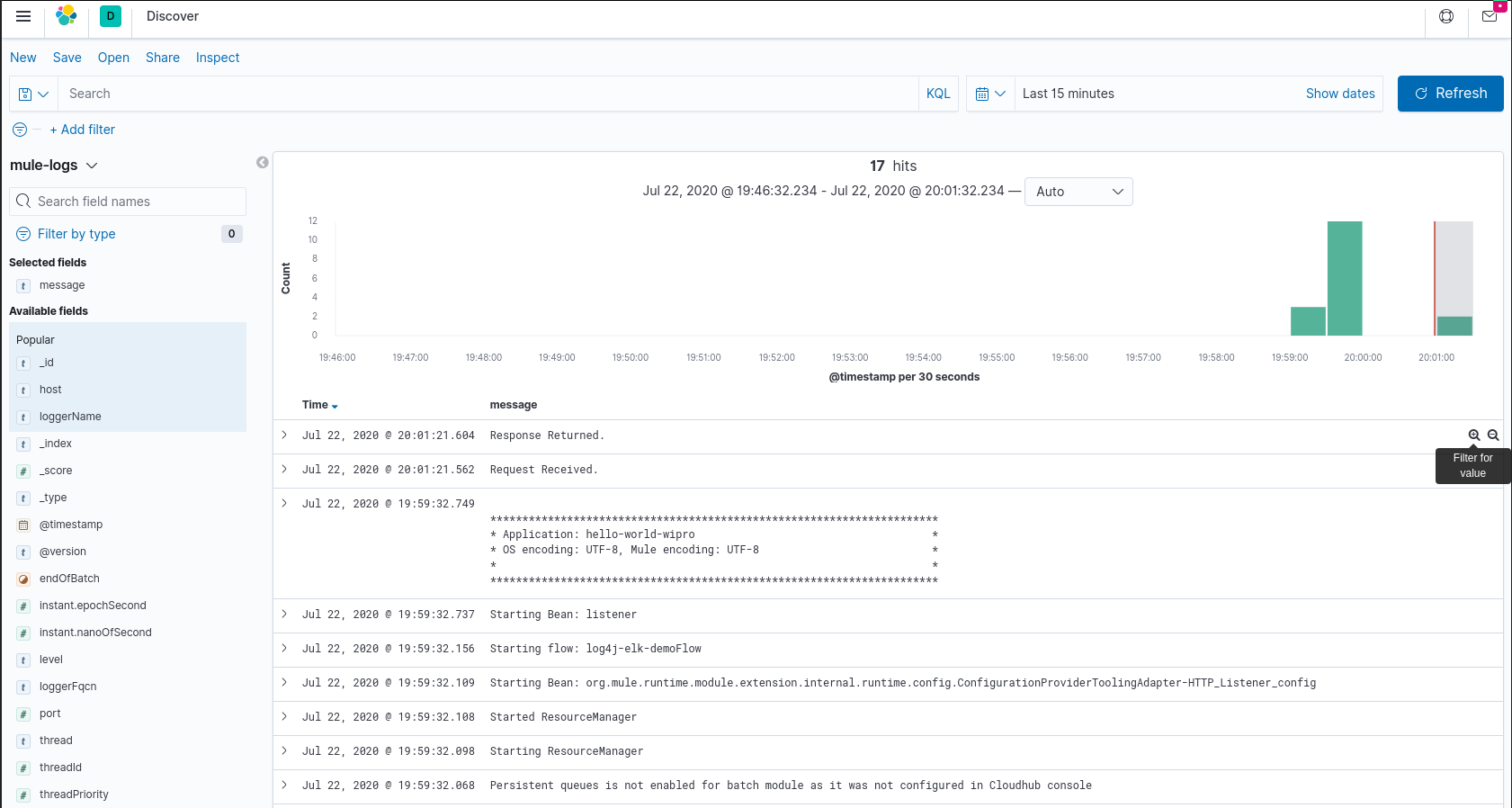

I have used the GCP platform to build my test lab since it offers $300 USD of free trial credit, but you can do it on your own servers or any other public cloud platform as well. In this article, I’ll set up a single-node Elasticsearch cluster (refer to this article) and two Apache web servers.

Input - An input is responsible for managing the harvesters and finding all sources to read from. The harvester reads each file, line by line, and sends the content to the output.

Filebeat starts with one or more inputs that look in the locations you’ve specified for log data. For each log that Filebeat locates, it starts a harvester. Each harvester reads a single log for new content and sends the new log data to libbeat. Libbeat aggregates the events and sends the aggregated data to the output that you’ve configured for Filebeat.
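
To make the input, harvester, and libbeat roles more concrete, here is a toy Python model of that flow. It is only an illustration of the description above, not Filebeat's actual implementation: the function names are invented, in-memory `StringIO` objects stand in for log files, and the real harvesters run concurrently and track file state, which this sketch ignores.

```python
import io
from typing import Iterable, Iterator, List, TextIO


def harvester(log: TextIO) -> Iterator[str]:
    """Read a single log line by line and yield each non-empty line as an event."""
    for line in log:
        line = line.rstrip("\n")
        if line:
            yield line


def run_input(logs: Iterable[TextIO]) -> List[str]:
    """Play the input role: start one harvester per log and collect the events.

    The collected list stands in for the aggregation that libbeat performs.
    """
    aggregated: List[str] = []
    for log in logs:
        aggregated.extend(harvester(log))
    return aggregated


def send_to_output(events: List[str]) -> None:
    """Stand-in for the configured output: print each aggregated event."""
    for event in events:
        print(event)


# Two in-memory "log files" in place of real paths.
logs = [io.StringIO("apache: GET /\napache: GET /about\n"), io.StringIO("kibana: started\n")]
send_to_output(run_input(logs))
```

Each `StringIO` here gets its own harvester, mirroring the one-harvester-per-log rule, and the events reach the output only after aggregation.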

Filebeats cleanup data code

I have some services that produce a lot of logs (~50 GB per day), and I saw that Filebeat uses a lot of memory for huge log files. For example, in one instance it used 10-12 GB to process one file. I tried to understand where the problem is and did some debugging. As I understand from the source code, Filebeat makes a lot of copies of my data and keeps them in internal buffers, but the memory increased to more than 6x the size of my example log file, which was ~346 MB.
